Large-scale neural networks have revolutionized generative models by granting them an unprecedented ability to capture complex relationships among many variables. Among this model family, diffusion models stand out as a powerful approach for image generation. Nevertheless, when dealing with discrete data, diffusion models still fall short of the performance achieved by autoregressive models.

Alex Graves, a renowned researcher in the field of machine learning, the creator of Neural Turing Machines (NTM), and one of the pioneers behind differentiable neural computers, has published a new paper, Bayesian Flow Networks, as the lead author. In the paper, the research team presents Bayesian Flow Networks (BFNs), a novel family of generative models that manipulate the parameters of the data distribution rather than operating on noisy data, which provides an effective way to deal with discrete data.

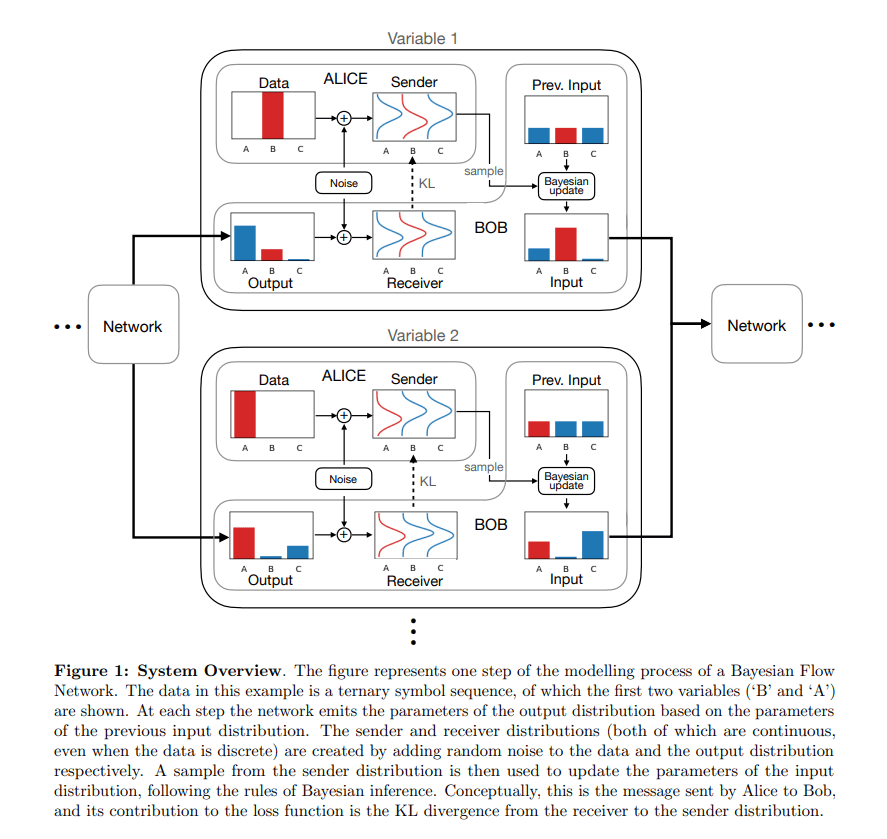

BFNs can be summarized as a transmission scheme. At each transmission step, the parameters of an input distribution (e.g. the mean of a normal distribution, or the probabilities of a categorical distribution) are fed into the neural network, which outputs the parameters of a second distribution, referred to as the “output distribution”. Next, a “sender distribution” is created by adding noise to the data. Finally, a “receiver distribution” is generated by convolving the output distribution with the same noise distribution used in the sender distribution.
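
To make the convolution step concrete, here is a minimal Python sketch for a toy one-dimensional variable that can take a handful of candidate values. The candidate values, the output probabilities, the noise precision `alpha`, and all function names are illustrative assumptions, not taken from the paper. With Gaussian noise, convolving a discrete output distribution with the noise amounts to a mixture of Gaussians, one per candidate value.

```python
import math


def normal_pdf(y, mean, precision):
    """Density of a 1-D normal with the given mean and precision (1 / variance)."""
    return math.sqrt(precision / (2 * math.pi)) * math.exp(-0.5 * precision * (y - mean) ** 2)


def sender_pdf(y, x, alpha):
    """Sender: the true data value x plus Gaussian noise of precision alpha."""
    return normal_pdf(y, x, alpha)


def receiver_pdf(y, values, output_probs, alpha):
    """Receiver: the output distribution convolved with the sender's noise.

    For a discrete output distribution this is a mixture with one
    noisy component per candidate value.
    """
    return sum(p * normal_pdf(y, v, alpha) for v, p in zip(values, output_probs))


# Hypothetical toy setup (assumed for illustration only).
values = [-1.0, 0.0, 1.0]        # candidate data values
output_probs = [0.1, 0.2, 0.7]   # stand-in for the network's output distribution
alpha = 4.0                      # noise precision shared by sender and receiver

print(sender_pdf(0.8, 1.0, alpha))
print(receiver_pdf(0.8, values, output_probs, alpha))
```

In this sketch, if the output distribution puts all of its mass on the true value, the receiver density coincides with the sender density.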

Intuitively, the sender distribution is the one that would be used if a given value were correct. The receiver distribution therefore sums over all the hypothetical sender distributions, each weighted by the probability of the corresponding value under the output distribution. A sample from the sender distribution is then used to update the input distribution according to the rules of Bayesian inference. Once the Bayesian update is complete, the parameters of the input distribution are fed into the network again to produce the parameters of the next output distribution.
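
The Bayesian update can be sketched for the simplest continuous case. The example below assumes a normal input distribution with mean `mu` and precision `rho`, a standard normal prior, and a Gaussian sender with precision `alpha`; it uses the textbook conjugate update for a Gaussian with known noise precision. The network call between steps is left out, and the function names and the schedule of `alpha` values are hypothetical.

```python
import random


def bayesian_update(mu, rho, y, alpha):
    """Conjugate update of the input distribution N(mu, 1/rho).

    y is a noisy observation of the data with noise precision alpha.
    Precisions add, and the new mean is a precision-weighted average.
    """
    new_rho = rho + alpha
    new_mu = (rho * mu + alpha * y) / new_rho
    return new_mu, new_rho


def transmit(x, alphas, seed=0):
    """Run several transmission steps for a scalar data value x."""
    rng = random.Random(seed)
    mu, rho = 0.0, 1.0  # standard normal prior (an assumption of this sketch)
    for alpha in alphas:
        y = rng.gauss(x, alpha ** -0.5)  # sample from the sender distribution
        mu, rho = bayesian_update(mu, rho, y, alpha)
        # In a full model, (mu, rho) would be fed to the network here.
    return mu, rho


mu, rho = transmit(0.5, [1.0, 2.0, 4.0])
print(mu, rho)
```

Each step makes the input distribution more confident (its precision grows by `alpha`) while pulling its mean toward the noisy sample.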

As a result, the generative process of the proposed Bayesian Flow Networks is fully continuous and differentiable, because the network operates on the parameters of a data distribution rather than directly on the noisy data. This makes it applicable to discrete data as well.
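
A small sketch can show why working on parameters keeps things continuous even when the data is discrete. It assumes one possible Gaussian sender for a class index `x` among `K` classes, with per-component mean `alpha * (K * onehot(x) - 1)` and variance `alpha * K`. Under that assumption, Bayes' rule reduces to multiplying each class probability by `exp(y_k)` and renormalizing. The function names and the specific values are illustrative.

```python
import math
import random


def sample_sender(x, num_classes, alpha, rng):
    """Noisy observation of class index x under the assumed Gaussian sender."""
    std = math.sqrt(alpha * num_classes)
    return [
        rng.gauss(alpha * (num_classes * (1.0 if k == x else 0.0) - 1.0), std)
        for k in range(num_classes)
    ]


def update_probs(theta, y):
    """Posterior class probabilities: proportional to theta_k * exp(y_k)."""
    m = max(y)  # subtract the max for numerical stability
    weights = [t * math.exp(v - m) for t, v in zip(theta, y)]
    total = sum(weights)
    return [w / total for w in weights]


rng = random.Random(0)
theta = [1.0 / 3.0] * 3  # uniform prior over three classes
for alpha in [0.5, 1.0, 2.0]:  # hypothetical accuracy schedule
    y = sample_sender(2, 3, alpha, rng)
    theta = update_probs(theta, y)
print(theta)
```

The data `x` is a discrete class index, but `theta` stays a vector of real-valued probabilities throughout, which is what the network would take as input.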

In their empirical study, the researchers show that BFNs surpass all known discrete diffusion models on the text8 character-level language modelling task. The team hopes their work will inspire fresh perspectives and encourage more research on generative models.

The paper Bayesian Flow Networks is available on arXiv.

Author: Hecate He | Editor: Chain Zhang
