It is relatively easy for human eyes to perceive and understand transparent objects such as windows, plastic bottles and glass ornaments. Optical 3D sensors, however, are generally built around the assumption that surfaces reflect light evenly in all directions, so they can struggle with see-through objects that refract and reflect light in less predictable ways. To address this, Google teamed up with researchers from Synthesis AI, a San Francisco startup specializing in synthetic data technology, and Columbia University to introduce ClearGrasp, a deep learning approach intended as a first step toward teaching machines how to “see” transparent materials.

ClearGrasp is trained to estimate accurate depth for transparent objects. According to the researchers, the approach not only detects transparent objects but also helps with grasp planning by recovering “detailed non-planar geometries, which are critical for manipulation algorithms.” The method works in two steps:

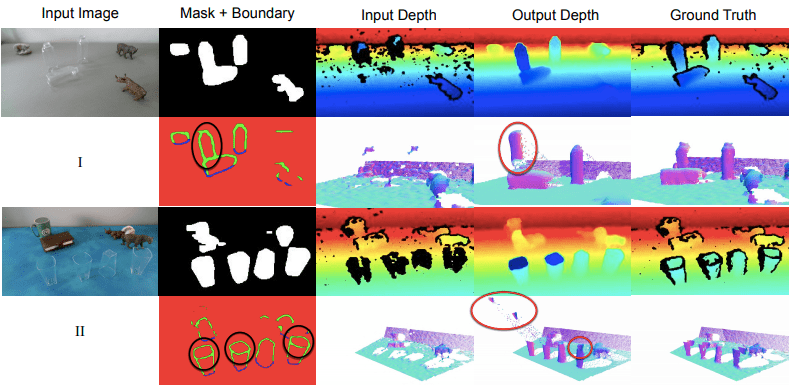

- Feed the colour image to deep convolutional networks that estimate surface normals, detect occlusion boundaries, and segment transparent objects;

- Use the transparent-object mask to remove the unreliable depth values of transparent surfaces from the initial input depth image, then feed the modified depth, together with the predicted surface normals and occlusion boundaries, into a global optimization.
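The masking part of the second step can be illustrated with a few lines of NumPy. This is a minimal sketch, not ClearGrasp's released code: the function name `remove_transparent_depth` is hypothetical, and it assumes a depth image in which a value of 0 marks a missing reading and a boolean mask that is `True` on transparent pixels.

```python
import numpy as np


def remove_transparent_depth(raw_depth, transparent_mask):
    """Hypothetical helper: drop sensor depth on transparent surfaces.

    Assumes 0 means "no reading" in the depth image and that
    transparent_mask is a boolean array of the same shape.
    Returns the cleaned depth and a boolean mask of pixels still trusted.
    """
    # np.array(..., dtype=float) makes a copy, so the input is not modified
    depth = np.array(raw_depth, dtype=float)
    # Depth measured on glass or clear plastic is unreliable, so discard it
    depth[transparent_mask] = 0.0
    # Pixels with a remaining non-zero reading act as anchors later on
    valid = depth > 0
    return depth, valid
```

The remaining valid pixels serve as fixed anchors, while the holes left by the mask are filled in by the optimization.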

The surface normals guide the shape of the reconstructed depth, while the occlusion boundaries help keep separate objects distinct. Because the researchers could not find a suitable existing dataset for training and testing ClearGrasp, they made another key contribution to the field by building a large-scale synthetic training dataset of more than 50,000 RGB-D images. They also created a real-world test benchmark with 286 RGB-D images of transparent objects and their ground-truth geometries.
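The following is a simplified sketch of how such a global optimization can combine the three inputs as a least-squares problem. It is not ClearGrasp's actual solver. It assumes an orthographic camera with unit pixel spacing, so a normal (nx, ny, nz) implies depth gradients of -nx/nz and -ny/nz. It also assumes a sign convention for the normals, and it uses a hypothetical `boundary_weight` that weakens smoothness links across occlusion boundaries. The function name and all parameters are illustrative.

```python
import numpy as np


def complete_depth(depth0, valid, normals, boundary,
                   lam_d=1.0, lam_n=1.0, boundary_weight=0.05):
    """Illustrative depth completion via dense linear least squares.

    depth0:   (H, W) depth, trusted only where `valid` is True
    normals:  (H, W, 3) predicted surface normals (assumed convention)
    boundary: (H, W) bool, True on predicted occlusion boundaries
    """
    h, w = depth0.shape
    idx = np.arange(h * w).reshape(h, w)
    rows, rhs = [], []

    def add_row(coeffs, target, weight):
        # One weighted linear equation: sum(c * D[i]) = target
        row = np.zeros(h * w)
        for i, c in coeffs:
            row[i] += c
        rows.append(np.sqrt(weight) * row)
        rhs.append(np.sqrt(weight) * target)

    # Data term: stay close to the depth that survived masking
    for y, x in zip(*np.nonzero(valid)):
        add_row([(idx[y, x], 1.0)], depth0[y, x], lam_d)

    # Normal term: neighbouring depths should differ by the gradient
    # implied by the normals (orthographic approximation)
    nz = np.clip(normals[..., 2], 1e-3, None)
    gx = -normals[..., 0] / nz
    gy = -normals[..., 1] / nz
    for y in range(h):
        for x in range(w):
            for dy, dx, g in ((0, 1, gx), (1, 0, gy)):
                y2, x2 = y + dy, x + dx
                if y2 >= h or x2 >= w:
                    continue
                # Weaken the link if it touches an occlusion boundary
                crosses = boundary[y, x] or boundary[y2, x2]
                wgt = lam_n * (boundary_weight if crosses else 1.0)
                target = 0.5 * (g[y, x] + g[y2, x2])
                add_row([(idx[y2, x2], 1.0), (idx[y, x], -1.0)], target, wgt)

    sol, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return sol.reshape(h, w)
```

For a tilted plane with its centre masked out, the normals alone are enough to fill the hole consistently with the surrounding valid depth. A practical implementation would use a perspective camera model and a sparse solver instead of a dense matrix.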

The researchers compared ClearGrasp with DenseDepth, a state-of-the-art monocular depth estimation method that uses a deep neural network to predict depth values directly from colour images. ClearGrasp significantly outperformed DenseDepth in its ability to generalize to previously unseen object shapes.

Putting their algorithm into action, the researchers incorporated ClearGrasp into a robotic picking system. The arm was tasked with identifying and grasping various transparent objects on a table within the robot’s workspace: ClearGrasp processed the RGB-D camera images, and its output was fed to a state-of-the-art grasping algorithm that was evaluated over 500 trial-and-error grasping attempts.

ClearGrasp showed its potential in practical pick-and-place applications, with researchers reporting a significant improvement in grasping success rate: from 64 percent to 86 percent for suction and from 12 percent to 72 percent for parallel-jaw grasping.

The researchers acknowledge that ClearGrasp has limitations and that there is room for improvement, for example in handling diverse lighting conditions and cluttered environments. They conclude, however, that the method successfully combines synthetic training data and multiple sensor modalities to infer accurate 3D geometry of transparent objects for manipulation.

The paper ClearGrasp: 3D Shape Estimation of Transparent Objects for Manipulation is on arXiv. More information on the results can be found on the project page. The code, data, and benchmark have been released on GitHub.

Author: Reina Qi Wan | Editor: Michael Sarazen
