Since their introduction in 2020, vision transformers (ViTs) have become a leading computer vision architecture, achieving state-of-the-art performance on tasks ranging from object detection and image recognition to semantic segmentation. But understanding the internal mechanisms that contribute to ViTs’ success — what and how they actually learn from images — remains challenging.

In the new paper What Do Vision Transformers Learn? A Visual Exploration, a research team from the University of Maryland and New York University uses large-scale feature visualizations from a wide range of ViTs to gain insights into what they learn from images and how they differ from convolutional neural networks (CNNs).

The team summarizes their main contributions as follows:

- We observe that uninterpretable and adversarial behaviour occurs when applying standard methods of feature visualization to the relatively low-dimensional components of transformer-based models, such as keys, queries, or values.

- We show that patch-wise image activation patterns for ViT features essentially behave like saliency maps, highlighting the regions of the image a given feature attends to.

- We compare the behaviour of ViTs and CNNs, finding that ViTs make better use of background information and rely less on high-frequency, textural attributes.

- We investigate the effect of natural language supervision with CLIP on the types of features extracted by ViTs. We find CLIP-trained models include various features clearly catered to detecting components of images corresponding to caption text, such as prepositions, adjectives, and conceptual categories.

As with conventional visualization methods, the team uses gradient steps to maximize feature activations from random noise. To improve image quality, they penalize total variation (Mahendran & Vedaldi, 2015) and adopt Jitter augmentation (Yin et al., 2020), ColorShift augmentation, and augmentation ensembling (Ghiasi et al., 2021) techniques.

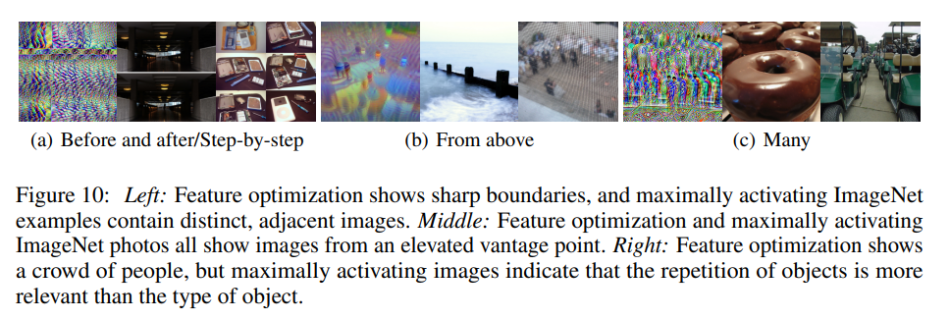

To enable a deeper understanding of a given visualized feature’s content, the team pairs each visualization with images from the ImageNet validation/training set that have the strongest activation effect with regard to the relevant feature. They plot the feature’s activation pattern by passing the most activating images through the ViT network and displaying the resulting pattern of feature activations.

The team first attempts to visualize features of the ViT’s multi-headed attention layer — including keys, queries, and values — by maximizing the activated neurons. Then they study the preservation of patch-wise spatial information from the visualizations of patch-wise feature activation levels, finding, surprisingly, that although every patch can influence the representation of every other patch, the representations remain local. This indicates that ViTs learn and preserve spatial information from scratch.

The team also discovers that this preservation of spatial information is abandoned in the network’s last attention block, which acts similarly to average pooling. They deduce that the network globalizes information in the last layer to ensure that the class token (CLS) has access to the entire image, concluding the CLS token plays a relatively minor role in the overall network and is not used for globalization until this last layer.

In their empirical study, the researchers find that the high-dimensional inner projections of ViTs’ feed-forward layers are suitable for producing interpretable images, while the key, query, and value features of self-attention are not. In CNN vs ViT comparisons, the team observes that ViTs can better utilize background information and make vastly superior predictions. ViTs trained with language model supervision are also shown to obtain better semantic and conceptual features.

Overall, this work employs an effective and interpretable visualization approach to provide valuable insights into how ViTs work and what they learn.

The code is available on the project’s GitHub. The paper What Do Vision Transformers Learn? A Visual Exploration is on arXiv.

Author: Hecate He | Editor: Michael Sarazen

We know you don’t want to miss any news or research breakthroughs. Subscribe to our popular newsletter Synced Global AI Weekly to get weekly AI updates.

Pingback: Maryland U & NYU's Visual Exploration Reveals What Vision Transformers Learn | Synchronized -

Pingback: Top Social Media Trends 2023 to Stay Ahead of the Game

شكرا

In sum, this work uses a powerful and comprehensible visualization strategy to shed light on the inner workings of ViTs and the lessons they acquire.

Thanks for the valuable information and insights.

You have to tap the screen so that the bird flaps its wings, trying to keep a steady rhythm in order to pass through the pipes scattered through its path.