DeepMind bot AlphaStar has scored a convincing 10/10 victory against pro human players in a special series of StarCraft II matches. Plucky 26 year-old Polish gamer Grzegorz “MaNa” Komincz however salvaged a bit of human pride, snatching a surprise win yesterday in a live rematch at the DeepMind and Blizzard Entertainment Starcraft II Demonstration live stream event hosted in London.

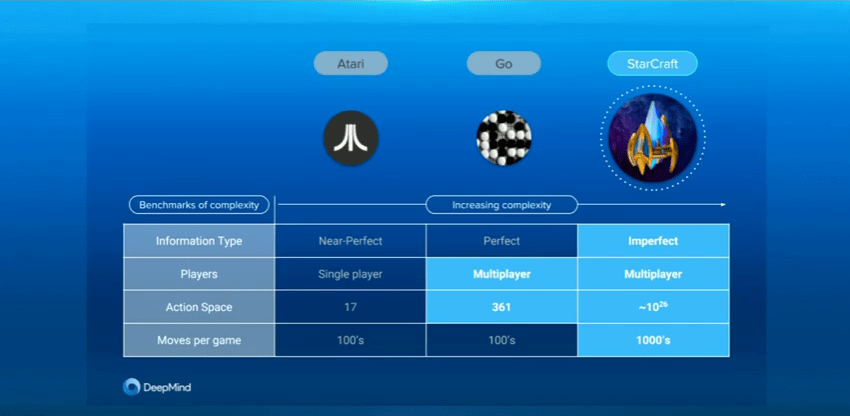

Alphabet AI company DeepMind wowed the machine learning community in 2017 when its bot AlphaGo trounced Go Master Ke Jie in a Beijing showdown. DeepMind’s StarCraft challenge is the latest in a growing number of AI ventures into complex video game environments, where agents must deal with hidden information, vast state and action spaces, and the need to act quickly.

AlphaStar made its ring entrance first, followed by Dairo (TLO) Wünsch and Grzegorz “MaNa” Komincz. The pair are StarCraft II professionals playing for the elite Team Liquid, and took turns behind the anchor desk to break down their matches against AlphaStar. Ten games were featured, all won by AlphaStar, all replays of contests actually held in mid December.

But what about the “live” demonstration? I asked myself the same question as I sat in front of my computer for two and half hours until the final and only live demonstration game began. (We’ll get to that.)

StarCraft, RTS Games and AI Research

StarCraft is a classic 1v1 competitive video game series ranked among the top PC games. As DeepMind Co-Founder & CEO Demis Hassabis tweeted before the event: “The complex real-time strategy game StarCraft II is a long-standing grand challenge for AI – excited to show our progress.” A DeepMind blog post elaborated on the challenge the game presents: “To win, a player must carefully balance big-picture management of their economy – known as macro – along with low-level control of their individual units – known as micro.”

DeepMind Research Scientist Oriol Vinyals told the audience another reason for choosing StarCraft as a research environment is “in StarCraft you don’t see the board all the times. There’s this notion of imperfect information. That means that major task is to predict and estimate what the other player is doing all the time.” This “imperfect information” refers to crucial information hidden from StarCraft players which requires “scouting” to be discovered. The phenomenon that can also manifest as “fog” on video game maps.

StarCraft gameplay involves first choosing to play as one of three alien races (Zerg, Protoss or Terran), which have different and specialized characteristics and abilities. Professional players such as TLO and MaNa typically develop and refine their styles by focusing on a particular alien race — for example TLO tends to specialise in Zerg, and MaNa in Protoss. Starting with a number of basic worker units, each player then collects resources to develop units, structures, technologies and capabilities that can help them defeat enemies.

StarCraft debuted 1998, and players have been participating in different formal and informal competitions ever since. AI and ML researchers have joined via the AIIDE bot competition, which aims to “foster and evaluate progress of AI research applied to real-time strategy (RTS) games.” Memorial University of Newfoundland Assistant Professor David Churchill organizes the annual StarCraft AI competition associated with AIIDE (AI for Interactive Digital Entertainment Conference).

So far, top human gamers had maintained their edge against bots in RTS games, a recent example being last summer’s loss by OpenAI bots against human players at the Dota 2 International in Vancouver.

The Match rules

- The games were played on a relatively old version of StarCraft II – version 4.6.2, and the game map was Catalyst LE.

- The match-ups were all Protoss Vs Protoss as this is currently the only Alien race AlphaStar can play

- AlphaStar played with a limited actions per minute condition to prevent it from clicking “insanely fast.”

- Five games per Match

AlphaStar’s 10 wins against TLO and MaNa seemed to suggest AI had turned the corner on complex video games. But gamers and gaming experts immediately began to debate whether the human players might have suffered unfair disadvantages in the AlphaStar matches. For example, the AlphaStar agent can view the entire game map at once; while human players cannot, and must navigate over the map manually to view particular areas. AlphaStar can be also be affected by fog of war, but as Co-Lead of the AlphaStar project David Silver explained, “[AlphaStar] gets to see any enemy units which are actually not obscured by fog of war, or not invisible. Anything which can be seen by human player if they were moving the camera to that position. ALL of that information is available to AlphaStar straight away if it is zoomed out.”

In StarCraft II, gameplay speed is measured by APM (Actions Per Minute). The graph below shows that overall, AlphaStar performed considerably fewer APMs than a human pro like TLO would. This suggests the AI makes more efficient decisions.

During the matches, AlphaStar was able to gather resources at a higher rate than the humans. AlphaStar’s reaction time — measured from when it first observes an opponent’s move and processes it via neural network to when it executes its response — is around 350 milliseconds. “That’s on the slow side of human players,” according to Silver.

TLO, who usually plays the Zerg race, told game commentator Artosis he “made many mistakes here because I’m not a Protoss player.” TLO prepared for the match by playing about a hundred games as Protoss, and estimated his skill level with Protoss at “not pro level, but it would still probably be the top percentile of players on the planet. It’s decent.”

The TLO vs AlphaStar match that streamed yesterday actually took place on December 12, 2018. “Hopefully I’ll get a rematch where I can play my strongest race,” said TLO afterward.

Seven days after AlphaStar defeated TLO, DeepMind invited StarCraft pro MaNa — who is normally a Protoss player — to test his skills against their bot. “I wasn’t expecting the AI to be that good. Everything [AlphaStar] did was proper, It was calculated, and it was done well. I thought I’m learning something,” said MaNa in defeat.

One example of AlphaStar’s excellent command came in a game when MaNa and the bot had almost the same resources, but AlphaStar managed to move ahead by outperforming MaNa at micro tasks — essential techniques such as low-level control of individual units such as Stalkers. Quipped commentator Artosis during the action, “Really nice micro management coming in from AlphaStar, forcing back three Stalkers to three Stalkers, whoever can control better is going to take that advantage.” Although AlphaStar’s attack seemed a bizarre strategy to many viewers, the bot’s superhuman micro-control eventually turned the battle around.

First Human Victory!

MaNa finally got his chance at a rematch during the live stream, predicting “This is going to be a revenge, I hope.” To the delight of viewers, MaNa quickly spotted a shortcoming in his opponent. Although AlphaStar has outstanding micro control and resource distribution techniques, the bot loses some of its flexibility when pushed out of its comfort zone.

MaNa employed Stalkers in a distract-ant-attack strategy. “If MaNa can continue to lure AlphaStar back, that’s the way MaNa can actually gain advantage,” commented Artosis as the strategy unfolded, “MaNa has been playing brilliantly. The Stalkers continually coming back and forth. AlphaStar either doesn’t know or doesn’t care about the observer that has been watching its movement.” MaNa’s excellent distribution of Stalkers pressured AlphaStar to fight back, which slowed down its economic development. MaNa exploited the flaw, and as AlphaStar made the same mistake over and over again, MaNa swept in and snapped the victory flag from AlphaStar’s base.

There is an important caveat to MaNa’s victory: AlphaStar played this match restricted by a windowed view of the game map.

Training – The AlphaStar league

A deep neural network generates AlphaStar’s behavior. Researchers input data from the raw game interface into the network, which produces a series of instructions used to compose an action within the game. DeepMind says AlphaStar also employs a brand new multi-agent learning algorithm. The partnership allowed DeepMind researchers to train the neural network with supervised learning from anonymised Blizzard human games. In this way, AlphaStar could leverage imitation learning to obtain knowledge of basic micro and macro strategies. DeepMind says “this initial agent defeated the built-in “Elite” level AI – around gold level for a human player – in 95% of games.”

Researchers created an “AlphaStar League” where a multi-agent reinforcement learning process pitted agents against each other as competitors, with the best advancing. “Finally at the end of the AlphaStar League, we selected the least exploitable set of five agents out of the league, that’s something we call the Nash of the league. And that’s the five different agents that here and now we’re actually playing,” explained Silver.

Training took only a week, during which time the top bots has accumulated the equivalent of 200 years of human play, according to Vinyals.

DeepMind stresses that the goal of the AlphaStar project wasn’t to make AI to beat humans at classic video games, but to use the game’s complexity to find alternative ways to train AI agents. After AlphaGo, AlphaGo Zero, AlphaFold (a protein folding bot), and now AlphaStar — what’s next for the company?

Vinyals says benchmarking how DeepMind algorithms or agents perform in a wide variety of tasks is an essential step toward a single and ultimate goal: “The mission of DeepMind is to build artificial general intelligence.”

Further details about the DeepMind StarCraft II live stream and the research behind AlphaStar can be found on DeepMind’s Blog.

Journalist: Fangyu Cai | Editor: Michael Sarazen

0 comments on “GG! DeepMind Struts Its StarCraft Strength; Humans Strike Back”