The BAAI Conference 2022 kicked off at 9:00 am on May 31 in Beijing and ran through June 2. AI experts, industry leaders, young talents and international delegates joined the virtual gathering and live stream for three busy days of high-level keynotes, tech talks, parallel forums and networking. The conference addressed a variety of contemporary AI challenges, ranging from large-scale multimodal models and neurocomputers to AI for science and autonomous driving, among other topics.

The host, Beijing Academy of Artificial Intelligence (BAAI), is a well-established non-profit research institute that promotes strategic collaborations between academia and industry to bridge the gap between pioneering AI research and cutting-edge applications in complex real-world scenarios.

China’s most influential AI academic conference, BAAI 2022 welcomed over 200 domestic and international experts and scholars, including Israeli cryptographer and 2002 Turing Award Laureate Adi Shamir, DeepMind Distinguished Research Scientist Richard Sutton, Gödel Prize Laureate Cynthia Dwork, Head of Hugging Face Research Douwe Kiela, OpenAI Research Team Lead Jeff Clune, University College London neuroscientist Karl Friston, and UC Berkeley computer scientist Michael I. Jordan.

Following the conference’s opening remarks, BAAI Dean and Peking University Professor TieJun Huang took the stage to introduce three important BAAI advancements: MetaWorm 1.0 (Tian Bao), the AI Chip Ecosystem Laboratory & Jiuding AI-Computing Platform, and the latest applications powered by China’s first homegrown super-scale intelligent model, Wu Dao.

MetaWorm 1.0

BAAI Life Simulation Research Center Director Lei Ma described MetaWorm 1.0 as a computational model of the nematode Caenorhabditis elegans (C. elegans) that couples the “most detailed” nervous system with a digital body built on 96 muscles, interacting in real time with a three-dimensional fluid simulation environment. This digital “Worm” has achieved forward locomotion in the simulation environment and represents a milestone in the eVolution project. MetaWorm 1.0 exhibits behaviours that parallel those of C. elegans in the real world, and the next goal on the eVolution project roadmap is to have it demonstrate more complex intelligent behaviours such as avoidance response and optimal foraging.

As MetaWorm 1.0 evolves to 2.0 and 3.0, the goal is for it to be “gradually becoming intelligent.” Studying the neural circuits of small animals to learn what nature can teach us about improving artificial intelligence has long been regarded as a promising approach. For example, a 2020 study published in Nature Machine Intelligence showed how a novel AI system with 19 control neurons, inspired by the brain of C. elegans, could enable high-fidelity autonomy for task-specific parts of complex autonomous systems, such as generating steering commands.

In summary, MetaWorm 1.0 is the most biologically accurate computational model of C. elegans and has achieved the following breakthroughs:

- MetaWorm 1.0 shows a complete simulation of all 302 neurons and their connection patterns and complex relationships in C. elegans.

- It has completed a high-precision simulation of a sensorimotor circuit composed of 106 olfactory sensory neurons.

- The digital body of MetaWorm is built on 96 anatomically accurate simulated muscles.

- MetaWorm 1.0 interacts in real time with the three-dimensional fluid simulation environment.

- Through MetaWorm 1.0, a fully closed-loop simulation of C. elegans and the simulation environment is achieved in a computational model.

AI Chip Ecosystem Lab and Jiuding AI-Computing Platform

Significant AI technical innovations often occur alongside breakthroughs in infrastructure technologies. Reflecting this, BAAI announced the AI Chip Ecosystem Lab and Jiuding AI-Computing Platform, which researchers can use to boost AI evolution with cross-layer innovation. Jiuding has the world’s largest Chinese dataset for AI training, and will provide 1000P computation capacity and 400Gbps high-speed interconnection per server to support AI research activities. To enable Jiuding to run effectively on AI chipsets from different vendors, BAAI researchers are exploring an adaptation layer with self-learning and self-adaptation capabilities that can automatically find the most suitable computing resource for different tasks. BAAI Chief Engineer Yonghua Lin explained, “With more and more AI chips being delivered into the market, AI platform infrastructure will be required to support AI chips of various architectures and different capabilities. So we need to explore automatic technologies to help the industry solve the adaptation problem. It will be a kind of AI for AI system innovation. And it will be critical for the AI chip industry as well.”

At the conference, BAAI announced the open-sourcing of FlagAI (FeiZhi), an algorithm and tools project designed to support mainstream large-scale multimodal foundation models and simplify their trial and development. BAAI strongly believes in the benefits of open science and has consistently promoted an open-source approach to the innovation of AI algorithms. The FlagAI project has been launched on GitHub with plenty of tutorials and examples to support research in the global open-source community. Additional FlagAI features and algorithms will be added in future releases.

Big Model Applications in the Real World

The focus of the Wu Dao project for this year is to continue close collaborations with various companies in the fields of lifestyle, art and language to expedite its application on complex real-world tasks.

After working with OPPO, TAL Education Group, Taobao and Sogou, the Wu Dao project’s partnership with Meituan produced a large-scale multimodal model that provides some 700 million users daily with conveniences in search ads, smart assistants and fine-grained sentiment analysis, boosting Meituan’s search ads revenue by 2.7%.

Last month, BAAI released CogView2 (Synced coverage). At the conference, the CogView team showcased CogVideo as its next big breakthrough. CogVideo is a 9B-parameter large-scale pretrained text-to-video transformer model, trained by inheriting a pretrained text-to-image model, CogView2.

The Wu Dao project team is also carrying out a number of strategic collaborations with world-renowned organizations and institutes. It is currently working with the Arab Academy for Science, Technology & Maritime Transport and the Bibliotheca Alexandrina to build the world’s largest Arabic language dataset. The joint effort is aimed at developing large-scale Arabic language models and applications for solving complex real-world challenges.

Synced’s BAAI 2022 keynote and other presentation highlights are summarized below.

The Increasing Role of Sensorimotor Experience in Artificial Intelligence

Richard Sutton | DeepMind Distinguished Research Scientist

Sutton started his talk with an essential question: Will intelligence ultimately be explained in objective terms such as states of the external world, objects, and people; or in experiential terms such as sensations, actions, and rewards? He noted that experience has played an increasing role in this consideration over AI’s seven decades and identified four significant steps in which AI has turned toward experience to be more grounded, learnable, and scalable.

- Step 1: Agenthood (having experience)

- Step 2: Reward (goals in terms of experience)

- Step 3: Experiential state (state in terms of experience)

- Step 4: Predictive knowledge (to know is to predict experience)

Sutton posited that the alternative to the objective state is the experiential state, where the world is defined entirely in terms of experiences that are useful for predicting and controlling future experiences. He proposed the experiential state be recursively updated, and that by combining all experiential steps, a standard model of the experiential agent can be obtained.
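The recursive update mentioned above can be written compactly. The notation here is an assumption for illustration rather than something taken from the talk: $s_t$ is the agent's experiential state, $a_t$ its action, $o_{t+1}$ the next observation, and $u$ a state-update function.

```latex
s_{t+1} = u(s_t, a_t, o_{t+1})
```

Under this reading, the state never refers to the external world directly. It is a summary of past experience, rebuilt at each step from the previous summary plus the latest action and observation.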

Sutton also offered a philosophical take on the matter: “Experience offers a path to knowing the world: if any fact about the world is a fact about the experience, then it can be learned and verified from experience.” Despite Steps 3 and 4 being far from complete, he noted that many AI research opportunities remain, and the story of intelligence may one day be told in terms of sensorimotor experience.

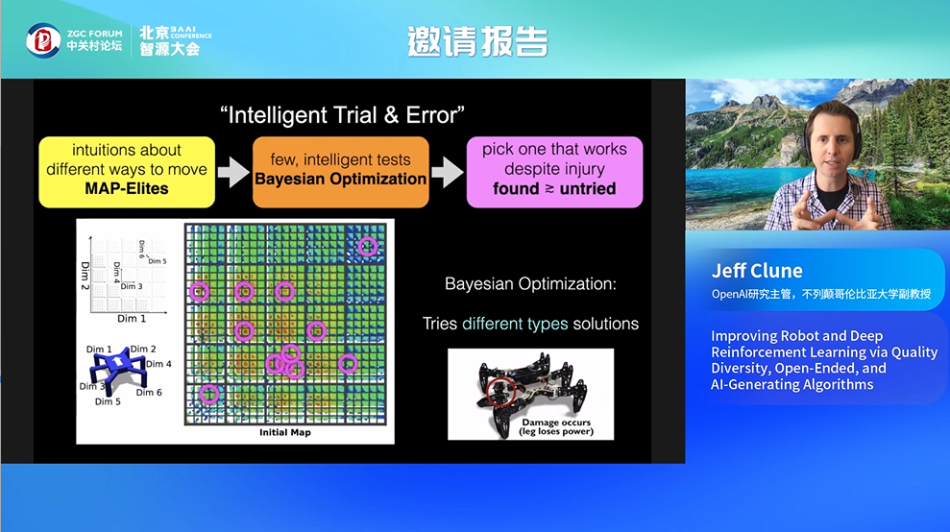

Improving Robot and Deep Reinforcement Learning via Quality Diversity, Open-Ended, and AI-Generating Algorithms

Jeff Clune | OpenAI Research Team Lead

Jeff Clune shared work that essentially represents two different approaches to reinforcement learning (RL):

- Quality Diversity Algorithms

- Open-Ended Algorithms

But how might we accomplish the grandest ambitions of very powerful AI? Clune’s answer was a novel paradigm: AI-Generating Algorithms.

- Quality Diversity Algorithms

  - Intelligent Trial & Error

  - Go-Explore

- Open-ended Algorithms

  - POET

Clune suggested we look at the history of how science progresses via innovations. It is critical that scientific and technological innovation generates new problems while simultaneously carrying out goal switching. But what exactly does this mean? Clune explained that the only way to solve complex problems is to create problems while we solve them, and to switch goals between them. For example, when the only known cooking method was heating a hanging pot, had scientists been rewarded only for heating food faster with less smoke, the microwave oven would never have been invented, because the radar technology it relies on would not have been developed.

How can we get our algorithms to do “goal switching” and realize such “serendipitous discoveries”?

A family of algorithms called Quality Diversity Algorithms stands out. Presently, one of the most popular algorithms of this type is MAP-Elites, which returns an entire set of high-quality solutions that Clune says are “the best you can find.”
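To make the idea of returning "an entire set of high-quality solutions" concrete, here is a minimal Python sketch of a MAP-Elites-style loop. It is an illustration under stated assumptions, not the implementation shown in the talk: the genome, the toy fitness function, the one-dimensional behaviour descriptor and all parameter names are hypothetical choices made for this example.

```python
import random


def map_elites(fitness, descriptor, dim=2, n_bins=10, n_init=50,
               n_iters=2000, sigma=0.1, seed=0):
    """Keep the best genome found so far in each behaviour bin."""
    rng = random.Random(seed)
    archive = {}  # bin index -> (fitness, genome)

    def try_insert(genome):
        f = fitness(genome)
        # The descriptor is assumed to lie in [0, 1]; map it to a bin.
        b = min(int(descriptor(genome) * n_bins), n_bins - 1)
        # A newcomer replaces the current elite only if it is fitter.
        if b not in archive or f > archive[b][0]:
            archive[b] = (f, genome)

    # Bootstrap the archive with random genomes.
    for _ in range(n_init):
        try_insert([rng.random() for _ in range(dim)])

    # Main loop: pick a random elite, mutate it, try to insert the child.
    for _ in range(n_iters):
        _, parent = rng.choice(list(archive.values()))
        child = [min(1.0, max(0.0, x + rng.gauss(0, sigma))) for x in parent]
        try_insert(child)
    return archive


def toy_fitness(genome):
    # Hypothetical objective: prefer genomes near the centre of the unit square.
    return -sum((x - 0.5) ** 2 for x in genome)


def toy_descriptor(genome):
    # Hypothetical behaviour descriptor: the first coordinate.
    return genome[0]


archive = map_elites(toy_fitness, toy_descriptor)
for b in sorted(archive):
    f, g = archive[b]
    print(b, round(f, 4), [round(x, 3) for x in g])
```

The result is one elite per behaviour bin rather than a single best solution, which is what distinguishes this family from a conventional optimizer.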

An illustrative outcome of the MAP-Elites algorithm is the 2015 Nature paper Robots that can adapt like animals, which introduces “an intelligent trial-and-error algorithm that allows robots to adapt to damage in less than two minutes, without requiring self-diagnosis or pre-specified contingency plans.”

When facing the dual challenges of detachment and derailment, the Go-Explore algorithm comes into play. Go-Explore’s strategy is carried out in two phases. It first initializes itself by taking random actions, storing the states visited, and starting a simple loop until the problem is solved. It then robustifies the solutions into a deep neural network via imitation learning.
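The first phase described above can be sketched in a few lines of Python. This is a toy illustration only: the one-dimensional chain environment, the cell definition (the position itself), the selection weights and every name below are assumptions made for the example, and the second phase (robustification via imitation learning) is not shown.

```python
import random


def go_explore_phase1(goal=20, explore_steps=5, max_iters=10_000, seed=0):
    """Explore a deterministic 1-D chain until the goal cell is in the archive."""
    rng = random.Random(seed)
    archive = {0: []}   # cell -> shortest action list found that reaches it
    visits = {0: 0}     # cell -> how often it was chosen as a starting point

    for _ in range(max_iters):
        if goal in archive:
            break
        # Select: favour cells that were rarely chosen before.
        cells = list(archive)
        weights = [1.0 / (1 + visits[c]) for c in cells]
        cell = rng.choices(cells, weights=weights)[0]
        visits[cell] += 1

        # Return: the toy environment is deterministic, so restoring the
        # position and its stored action list is enough.
        pos, actions = cell, list(archive[cell])

        # Explore: take a few random actions from the restored state.
        for _ in range(explore_steps):
            a = rng.choice([-1, 1])
            pos = max(0, min(goal, pos + a))
            actions.append(a)
            # Store new cells, or shorter routes to known cells.
            if pos not in archive or len(actions) < len(archive[pos]):
                archive[pos] = list(actions)
                visits.setdefault(pos, 0)
    return archive


archive = go_explore_phase1()
print(len(archive[20]), "actions reach the goal")
```

Remembering visited cells and returning to them before exploring further is what addresses detachment and derailment in this sketch: promising frontiers are never forgotten, and exploration always starts from a state that was actually reached.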

“In my ideal world, algorithms do not require domain knowledge but will take advantage of it,” Clune added. This view reflects the improved results of the Go-Explore algorithm, which continues to demonstrate the value of Quality Diversity algorithms for hard-exploration problems and inspire new and novel research directions. “Collecting a diverse repertoire of high-quality entities gives you a lot of fuel to power other algorithms downstream.”

“Traditional machine learning usually relies on human efforts to pick challenges for algorithms to solve,” Clune explained. “But it is way more interesting to me to focus on the notion of open-endedness, which means algorithms are truly, endlessly innovating. If you can run them forever, they will keep doing interesting new things. An example of that would be the natural evolution, which has been going on for 3.5 billion years. The question is, can we make algorithms to do that? Another way to think about it is, could you make an algorithm to run for a billion years?”

Clune proposed researchers learn from natural evolution and human culture, where innovating means generating new problems, then solving them to generate more new problems. He enthusiastically introduced the results obtained by the enhanced POET algorithm — a phylogenetic tree of the first 100 environments of a POET run. “This is one of my most favourite plots and results in my entire scientific career because I have been trying to generate this plot since I was a PhD student on Day One.” Clune said the results left him with an unforgettable impression since they resemble those from nature, exhibiting a clear signature of open-ended algorithms: multiple, deep, hierarchically nested branches.

Clune concluded by noting that although we have a long way to go before achieving artificial general intelligence (AGI), there are three pillars that could support research toward that goal:

- Meta-learning architectures

- Meta-learning learning algorithms

- Generating effective learning environments

Clune believes AI-generating algorithms would likely be the fastest path to AGI. The hypothesis is that pieces of AI are learnable, and learned solutions ultimately win out, so we should be going all-in on learning the solutions to AGI.

“These algorithms are worthwhile even if they are not the fastest path because they shed light on our origins, and they tend to be very creative and could surprise us,” Clune told the audience. “They can create an entirely new intelligence that we could never dream of and teach us what it means to be intelligent.”

Large Language Models: Will they keep getting bigger? And, how will we use them if they do?

Luke Zettlemoyer | Meta AI

“Will large language models keep getting bigger?” asked Luke Zettlemoyer, addressing a hot topic in machine learning research. As Synced previously reported, the large-scale multimodal Wu Dao model has a whopping 1.75 trillion parameters, roughly ten times that of OpenAI’s powerful GPT-3.

Today’s data-hungry language models are not just getting a lot bigger; they have also become adept zero-shot learners. Will researchers be able to keep scaling them up, or are we approaching the physical limitations of computing hardware? How can we best use these big models? And what about alternative forms of supervision?

Zettlemoyer introduced two ideas he and his research team have been investigating to enable further scaling:

- BASE Layers: balanced mixtures of experts

- DeMix Layers: domain-specific experts

Zettlemoyer noted that in expert specializations, an assignment often depends only on the previous word and corresponds to simple word clusters. Could even simpler routing work?

“When you’re trying to scale, you’d want to go simple,” Zettlemoyer suggested, noting that the DeMix Layers approach is “much much simpler, and we are currently actively trying to scale up.” Each domain has its expert, and the modular model can mix, add, or remove experts as necessary, with rapid adaptation throughout training and testing.
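The modularity described above, with one expert per domain that can be mixed, added or removed, can be illustrated with a small Python sketch. This is a toy stand-in under stated assumptions, not the DeMix implementation: the class name, its methods and the lambda "experts" are all hypothetical, and real experts would be neural network sublayers.

```python
class DomainExpertMixture:
    """Toy layer holding one expert per domain (all names are hypothetical)."""

    def __init__(self):
        # domain name -> callable mapping a list of floats to a list of floats
        self.experts = {}

    def add_expert(self, domain, fn):
        self.experts[domain] = fn

    def remove_expert(self, domain):
        del self.experts[domain]

    def forward(self, x, weights=None):
        # Default to a uniform mixture over all current experts.
        if weights is None:
            weights = {d: 1.0 / len(self.experts) for d in self.experts}
        total = sum(weights.values())
        out = [0.0] * len(x)
        for domain, w in weights.items():
            y = self.experts[domain](x)
            # Accumulate each expert's output, scaled by its normalized weight.
            out = [o + (w / total) * yi for o, yi in zip(out, y)]
        return out


layer = DomainExpertMixture()
layer.add_expert("news", lambda x: [2.0 * v for v in x])
layer.add_expert("code", lambda x: [v + 1.0 for v in x])

# Mix the two experts with weights that favour the "news" domain.
mixed = layer.forward([1.0, 2.0], weights={"news": 0.75, "code": 0.25})

# Remove an expert without touching the remaining one.
layer.remove_expert("code")
news_only = layer.forward([1.0, 2.0])
print(mixed, news_only)
```

Because each expert is independent, adding or dropping a domain changes only the dictionary of experts, which is the property the sketch is meant to highlight.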

Zettlemoyer showcased recent studies on alternatives to fine-tuning for natural language generation tasks that keep language model parameters frozen, such as the lightweight “Prefix-Tuning” paradigm proposed by Stanford University researchers Li and Liang. These can deliver a “much better result based on a little bit of compute and careful framing,” and also enable directly learning a noisy channel prompting model for even better performance.

Zettlemoyer stressed the significance of open science to the research community and pointed out that limited access to models and restricted availability through APIs is “deeply problematic for doing good science.” He encouraged the audience to thoroughly explore research papers and look at the parameters to “see what the models are doing as much as you can.”

Zettlemoyer also summarized another project that Facebook AI is actively scaling up and generalizing to newer methods, and suggested new forms of training supervision that treat images, text and more as discrete tokens. “Text is not all you need, consider the structure and other modalities,” he concluded.

Travels and Travails on the Long Road to Algorithmic Fairness

Cynthia Dwork | Radcliffe Institute for Advanced Study

“What are risk prediction algorithms actually producing?” asked Professor Dwork to start her talk. She characterized this as a defining problem of artificial intelligence because it seeks to understand what the produced numbers mean and, more specifically, the “probability” of a non-repeatable event.

Dwork walked the audience through the landscape of previous relevant scientific research, then introduced Outcome Indistinguishability, a recent study she worked on with researchers from UC Berkeley, Stanford University, and the Weizmann Institute of Science. With regard to this “probability” function, the team’s paper argued that “prediction algorithms assign numbers to individuals that are popularly understood as individual ‘probabilities’ — e.g. what is the probability of 5-year survival after a cancer diagnosis? — and which increasingly form the basis for life-altering decisions.”

The study established the first scientific grounds for a “political argument” recommending auditors inspecting algorithmic risk prediction instruments be granted oracle access to the algorithm rather than simple historical predictions.

The talk was followed by an insightful Q&A session between Professor Dwork and BAAI Chairman of the Board HongJiang Zhang.

Professor Dwork mentioned that after working on differential privacy for many years, she wanted to come up with a new problem. Then, ten years ago, an intense and inspirational brainstorming session with a colleague from Tel Aviv University that spanned various topics drew her attention to the fairness of machine learning.

We are now at a time when computer science is increasingly involved in social science and AI ethics. For young scholars, Dwork advised, “One of my key pieces of advice is to read the news and follow what’s going on in the world. See what you like and what you don’t like. And then, for everything that you don’t like, my approach would be, how on earth can theoretical computer science contribute to this? And for the things you like, how can theoretical computer science develop this? So constantly look for how the tools you have could be relevant to the major questions you see around you.”

Dwork says paying close attention to societal affairs will enable young scholars to stay informed about the world and “ask how what you’ve learned that day in your technical studies could be relevant to the world.” After all, she added, once a scholar is trained, they become a precious resource, and their efforts and technical skills also bring an essential responsibility to society.

As a strong believer in basic research with a deep understanding of and working experiences in academia and industry, Dwork said both career paths could be great as long as they support basic research. She suggested young scholars evaluate their pursuits, “For people who are more theoretical, what’s wonderful about industry research is the opportunity to see your theoretical idea deployed on a massive scale. Think about your security and where you want to work in your career, considering the job security and risk-taking.”

Dwork believes it is vital to include algorithmic fairness and privacy among critical machine learning metrics such as accuracy. “I really believe that underlying everything is a metric. There is a notion somewhere, for a particular task, of how similar or dissimilar are each pair of individuals for this task? I would like to see a lot more work on that. I would also like to see a lot more work on the question of whether data representation itself is unfair. Everything begins with the data. So I suppose that would be the next big topic that I would like to look at, and I really encourage people watching this talk to think about that problem.”

Annual Young Scholars Forum and Meetup

In the Annual Young Scholars Forum and Meetup, HongJiang Zhang and UC Berkeley Professor Michael Jordan, who also serves on the BAAI Academic Advisory Committee, had a remarkable conversation on young scholars and career choices.

Professor Jordan cautioned young scholars not to simply chase the next big thing in machine learning research. “I prefer to go a little slower, not to have a big competition, and to work with my students at my own pace. I always try to somewhat orthogonalize with respect to where everyone else is going. We can think about decisions and uncertainty, uncertainty quantification, out-of-sample kinds of things where the distribution changes, economic incentives for data collection and sharing data, and competitive mechanisms for making decisions when there are multiple decision-makers. These are all things that happen in the real world, and they are things that there’s much less work on, but there’s not zero work. One of the reasons I choose to work in such areas is because I see that they are really important, have real-world consequences, and they’ve been neglected, so not that many people are thinking about them.”

Similar to Professor Dwork’s advice to young scholars, Jordan stressed that we must remember researchers are also real-world problem solvers. He added, “You really should think about a lifetime career. You should realize you’re not going to work on one thing. I spend at least 30% of my time learning new things or learning about topics that seem like they will be relevant to me sometime in the future.

“There are lots of great videos now, and lots of great, even undergraduate-level books on some really interesting topics. I don’t look for them to immediately give me research ideas or a payoff. But after five years or ten years, almost always, they come to be relevant.”

“So you have to be a problem solver. You can’t just come in and apply the technology and walk away and move on. You have to spend the time understanding the problem and bringing the technology to bear. I’ve done a lot of applied math, I’ve got a lot of control theory, and I’ve done a lot of statistics. So my brain has a partial understanding of all those things — and I can tell you that’s been the biggest secret of my success.”

BAAI 2022 – Expert Conference on AI

The first BAAI conference was held in October 2019. Informed by the organizers’ growing experience in fostering a welcoming environment for top talents and with a consistent focus on exploring fundamental AI technologies, BAAI 2022 proved an outstanding Expert AI Conference, attracting 30,000 registrations and 100,000 online attendees from various Chinese provinces and 47 countries and regions.

BAAI provides valuable platforms for the ever-growing global community of scholars, students, and industry experts pursuing advancements in AI research and real-world applications, helping them to learn, share and flourish.

Author: Fangyu Cai | Editor: Michael Sarazen
